No single vendor can claim that its system is the single source of truth. For the last few decades, I have watched platforms battle continuously to build a vertically integrated system capable of managing all aspects of product information, but I think it is time to put the brakes on this verticalization arms race. In the evolving landscape of product development, businesses are increasingly moving from traditional siloed approaches to more integrated and dynamic ways of managing information and processes.

At OpenBOM, we see that this shift is driven by the expansion of online services combined with advancements in data management, analytics, and AI. In today's article, I want to focus on two aspects: (1) the development of data services and (2) advancements in knowledge graphs. In our vision, these innovations promise to transform the way organizations manage product data throughout its lifecycle, from inception to retirement.

The Limitations of Siloed PLM

Traditionally, product development systems have operated in silos, where data and processes are segmented according to the phases of the product lifecycle, such as design, manufacturing, and service. PLM and ERP systems represent this approach very well. It often leads to inefficiencies, including:

- Data Duplication: Information is replicated across different departments, leading to discrepancies and increased storage costs.

- Lack of Visibility: Stakeholders have limited access to data beyond their immediate domain, which can result in delayed decision-making and reduced operational efficiency.

- Inflexibility: Siloed systems are often rigid, making it difficult to adapt to changes in market demands or to integrate with new technologies.

Although vendors have tried to solve these problems by introducing sophisticated integrations and sometimes even establishing strategic partnerships, there is much evidence that traditional data management approaches and single-tenant systems cannot provide an efficient solution. These limitations highlight the need for a more integrated approach, a focus on data services, and advanced solutions such as using knowledge graphs to create connected systems and data sets.

Transitioning to Online Data Services

The rise of cloud computing has paved the way for online services that provide comprehensive, real-time access to PLM data across the enterprise. This transition offers several advantages:

- Accessibility: Online services enable data access from any location at any time, facilitating remote collaboration among global teams.

- Scalability: Cloud-based solutions can be scaled up or down based on the needs of the business, allowing for more flexible resource management.

- Cost Efficiency: With online services, companies can reduce costs associated with maintaining physical IT infrastructure and licensing expensive software packages.

At OpenBOM, we provide an online environment with a flexible data model that can be adjusted in real time to define different data objects and relationships. The data management foundation of OpenBOM allows you to stream information from multiple sources and connect it without locking data, using data collaboration technologies.

In my earlier blog – Moving from tools to data services, I wrote about how companies can move to a distributed (connected) single source of truth by establishing online services. Here is an important passage:

Digital Thread vs Data Piping

Data from multiple tools and repositories must be connected and traceable. This is a Digital Thread for dummies. While the definition is simple, achieving the Digital Thread is harder than it seems. The main reason is actually the need to harmonize multiple repositories and figure out how to stop pumping data between tools and charging everyone for translation. Without doing so, we won’t have a Thread – it will be data pipes. The data is continuously pumped between the tools going from one application to another.

From Tools and Pipes to Data Services

The discussion made me think about an opportunity to simplify the process of digital thread creation. The fundamental difference between the current status quo and the desired outcome will be achieved by requesting all applications to provide a set of data services that can be consumed in real time. Think about the old paradigm of dynamic link libraries and later component technologies. Both allow you to reuse modules and functions rather than implement them again. The same approach can be used by turning all applications into online data services. Once it is done, these data services will become the foundation of the thread connecting them together. However, the data will live inside these services, which will ensure continuity and consistency.
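To make the difference between pipes and services concrete, here is a minimal Python sketch. It is an illustration only, not OpenBOM's API: `DataService`, `InMemoryService`, `Link`, and `DigitalThread` are hypothetical names, and the in-memory class stands in for a real online service. The thread stores references to items and asks the owning service for the data at read time, so nothing is copied between tools.

```python
from dataclasses import dataclass
from typing import Protocol


class DataService(Protocol):
    """Anything that can resolve an item id to its current record."""

    def get(self, item_id: str) -> dict: ...


class InMemoryService:
    """Hypothetical stand-in for an online service (design, purchasing, ...)."""

    def __init__(self, records: dict[str, dict]) -> None:
        self._records = records

    def get(self, item_id: str) -> dict:
        return self._records[item_id]

    def update(self, item_id: str, **changes) -> None:
        self._records[item_id].update(changes)


@dataclass(frozen=True)
class Link:
    """One strand of the thread: a reference to data, not a copy of it."""

    service: str
    item_id: str


class DigitalThread:
    def __init__(self, services: dict[str, DataService]) -> None:
        self._services = services
        self._links: dict[str, list[Link]] = {}

    def connect(self, product: str, service: str, item_id: str) -> None:
        self._links.setdefault(product, []).append(Link(service, item_id))

    def resolve(self, product: str) -> dict[str, dict]:
        # Data is fetched from the owning service at read time.
        return {
            link.service: self._services[link.service].get(link.item_id)
            for link in self._links.get(product, [])
        }


# Sample data, invented for the example.
design = InMemoryService({"D-100": {"name": "Bracket", "revision": "A"}})
purchasing = InMemoryService({"P-7": {"supplier": "Acme", "cost": 4.20}})

thread = DigitalThread({"design": design, "purchasing": purchasing})
thread.connect("bracket", "design", "D-100")
thread.connect("bracket", "purchasing", "P-7")

before = thread.resolve("bracket")["design"]["revision"]
design.update("D-100", revision="B")  # the change happens in the owning service
after = thread.resolve("bracket")["design"]["revision"]
```

Because the thread holds only links, the revision change made in the design service is visible on the next `resolve` call without any export, translation, or synchronization step. With data pipes, the same change would have to be pushed to every tool that keeps its own copy.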

Leveraging Knowledge Graphs

Knowledge graphs represent a significant step forward in handling complex data structures within PLM and other systems representing product data. A knowledge graph is a network of interconnected data entities (products, components, materials, etc.) and their relationships. This model enables:

- Enhanced Data Connectivity: By mapping relationships between different data points, knowledge graphs provide a holistic view of the product data ecosystem.

- Improved Decision-Making: The contextual relationships within a knowledge graph facilitate more informed decision-making by providing deeper insights into product data.

- Innovation: Knowledge graphs support advanced analytics and artificial intelligence (AI), fostering innovation in product development and lifecycle management.
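The definition above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not OpenBOM's implementation: the `KnowledgeGraph` class, the relation names `HAS_COMPONENT` and `MADE_OF`, and the bicycle data are all made up for the example. It shows entities as nodes, typed relationships as edges, and a "where used" traversal that answers an impact question.

```python
class KnowledgeGraph:
    """Toy graph: nodes are data entities, edges are typed relationships."""

    def __init__(self) -> None:
        self.nodes: dict[str, dict] = {}
        self._incoming: dict[str, list[tuple[str, str]]] = {}  # target -> [(relation, source)]

    def add_node(self, node_id: str, kind: str, **props) -> None:
        self.nodes[node_id] = {"kind": kind, **props}

    def add_edge(self, source: str, relation: str, target: str) -> None:
        self._incoming.setdefault(target, []).append((relation, source))

    def sources(self, node_id: str, relation: str) -> list[str]:
        """Nodes that point at node_id through the given relation."""
        return [s for r, s in self._incoming.get(node_id, []) if r == relation]

    def where_used(self, node_id: str, relation: str = "HAS_COMPONENT") -> set[str]:
        """All parents that contain node_id, directly or indirectly."""
        found: set[str] = set()
        stack = [node_id]
        while stack:
            current = stack.pop()
            for parent in self.sources(current, relation):
                if parent not in found:
                    found.add(parent)
                    stack.append(parent)
        return found


graph = KnowledgeGraph()
graph.add_node("bike", "Product")
graph.add_node("wheel", "Assembly")
graph.add_node("frame", "Component")
graph.add_node("spoke", "Component")
graph.add_node("steel", "Material")

graph.add_edge("bike", "HAS_COMPONENT", "wheel")
graph.add_edge("bike", "HAS_COMPONENT", "frame")
graph.add_edge("wheel", "HAS_COMPONENT", "spoke")
graph.add_edge("spoke", "MADE_OF", "steel")
graph.add_edge("frame", "MADE_OF", "steel")

# Impact question: what is affected if the steel specification changes?
affected: set[str] = set()
for part in graph.sources("steel", "MADE_OF"):
    affected |= {part} | graph.where_used(part)
```

Here `graph.where_used("spoke")` walks up through the wheel to the bike, and `affected` collects every part and assembly touched by a material change. In a siloed setup the same question would require joining data from separate material, design, and BOM systems.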

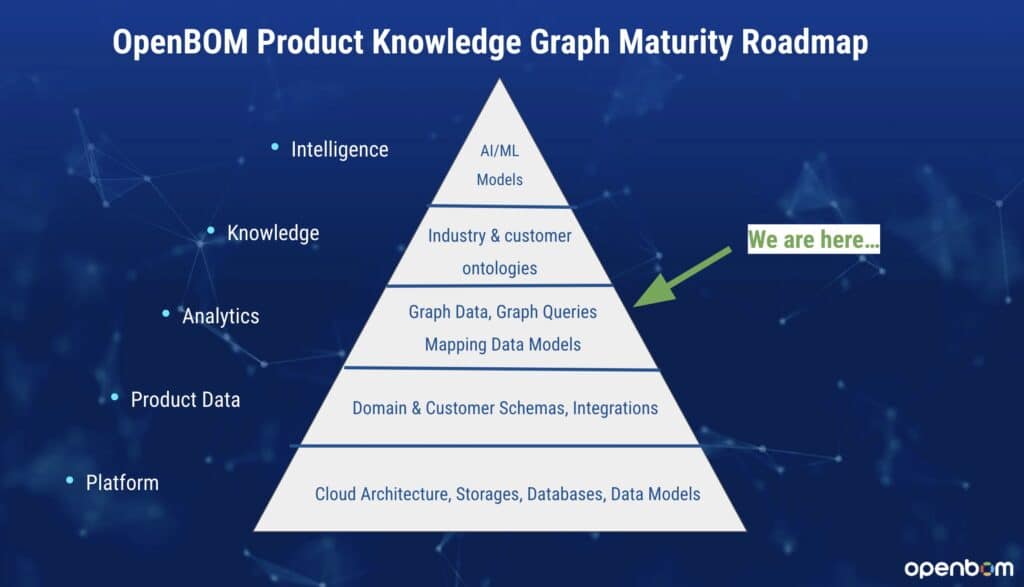

At OpenBOM, we have a vision and roadmap to build a flexible knowledge graph foundation that can be used in product development. Check out this article: OpenBOM Product Knowledge Graph Vision and Roadmap.

Openness and Data Connectivity

I see multiple developments that confirm our strategic vision of integrated and connected data management solutions. Here are some examples of solutions and technological advancements.

Prof. Martin Eigner published an article in which he speaks about the conflict between PLM and ERP. Check it out on LinkedIn – The constant Conflict Between PLM and ERP. In my view, the article is a “must read” for everyone who works in product development and data management. Here is an example of one of the prototypes that were developed. Check this one and others in the article.

The remarkable part of this example is the usage of knowledge graphs built using standard graph database solutions. This leads me to the next example related to standardization in graph database development – Graph Database, GQL standard, and Future PLM data layers.

Introducing standards in graph development is very important, because it will allow multiple systems to use data services and the underlying knowledge graphs. It will allow us to switch from application thinking to data thinking. Here is a passage from that article explaining the importance of this process:

PLM database management system development expands from the usage of relational databases only towards exploring new data model paradigms supported by a large number of new data management solutions. It is important to support complex data structures and provide effective data management for PLM tools. Graphs help to bring relevant data, perform data analysis, and organize database records in a much more flexible and efficient way. Graphs can contribute to the development of master data management and help to establish dynamic and connected single sources of truth for PLM architects. In my view, graph data models and graph databases will play a key role in the future of PLM development, introducing a new level of flexibility and efficiency in product lifecycle data management. The introduction of GQL as an ISO standard is another confirmation of the power of graph database systems and graph models, and also a statement to support the adoption of these models for software developers. It is a note to all PLM data architects – if graph databases are not in your toolbox, now is a great time to close the knowledge gap.

Conclusion

The shift from product data management and PLM silo thinking to integrated online services and knowledge graphs is not merely a technological upgrade but a strategic necessity in today’s fast-paced market environment. By breaking down data silos, enhancing connectivity, and leveraging cloud capabilities, companies can achieve greater agility, better compliance, and improved innovation in their product lifecycle processes. This transition is setting a new standard in PLM, positioning businesses to capitalize on the opportunities of the digital age.

Want to discuss how OpenBOM Graph Data Management can help your company? Contact us today and we will be happy to discuss more with you.

Best, Oleg
