In today’s digital age, artificial intelligence (AI) has become a driving force behind innovation in various industries, from healthcare and finance to transportation and entertainment. AI’s incredible potential lies in its ability to process vast amounts of data and make informed decisions or predictions.

After ChatGPT demonstrated how transformer models can be used to produce and validate content, companies across industries began looking at how this capability can be applied to existing and new industrial processes.

OpenBOM’s vision is to improve decision making using accurate data. In my blog today, I would like to discuss the connection between the current state of PLM software, modern data management approaches, and the foundation of AI development for engineering and manufacturing companies.

The current state of PLM technologies

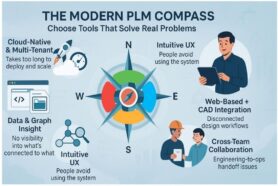

For the last 20 years, product lifecycle management (PLM) software has been developed as a technology and platform to support design and engineering processes. The core of these PLM platforms and systems is a flexible object data modeler built on a relational database management system (RDBMS), e.g., SQL Server or Oracle. This technology is mature and scalable, but it has its limitations.

Here are the top three technological limitations of existing PLM platforms from my article – The Multi-level complexity of engineering product data.

- The majority of existing production-level CAD systems are desktop systems using file management systems to store information. The document is a foundational element of engineering design, which brings a substantial level of complexity because it limits the granularity of data access, data management, intent, and impact analysis.

- The majority of existing production-level PLM systems use SQL-based client-server architectures, and sometimes web-based architectures, with servers built on a relational database (RDB). These systems were designed back in the 1990s, when the object-data management methodology was used to create an abstraction level for flexible data management; that abstraction is still used in all major PLM software platforms today. The computation capabilities of these systems are limited to the capabilities of RDB/SQL, and the abstraction model is limited to a single-tenant (company) level.

- Integration capabilities of existing major PLM software tools are limited to proprietary APIs and limited SOAP/web services interfaces. Customization and integration best practices rely on direct access to the database and (again) on SQL for data extraction and processing. Moreover, vendors’ business models rely on data lock-in and selling vertically integrated solutions.

Existing CAD, PDM, and PLM technologies have accumulated a large amount of product data, including CAD models and drawings, bills of materials, and other related data that is connected and located in PLM systems and other resources: databases, legacy data sources, and other business systems.

The Foundation of PLM AI: Product Data

AI relies heavily on data. It is critical to understand that AI potential can only be fully realized when AI systems are created with accurate and high-quality data. In fact, data is often referred to as the “fuel” that powers AI algorithms. These algorithms use data to identify patterns, make predictions, and improve their performance over time.

By analyzing existing data, the model learns the patterns and relationships in the data. If the data contains errors or inaccuracies, the model will pick up on these and may make incorrect predictions or decisions. For example, in a supply chain, inaccurate BOM records, sourcing, and vendor information could lead to incorrect recommendations about the right suppliers and costs.

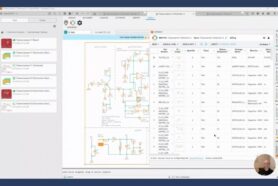

Accurate data ensures that AI models learn from reliable information, improving their ability to make accurate predictions and decisions. When accurate bills of materials with all their components are fed into an AI system, it can estimate the probability that a specific component should be present and identify which parts are missing from a BOM that is being prepared for release. It can also help identify mistakes and inconsistencies in a specific product design, which by itself can lead to improvements in that design.
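To make the idea of “missing part” detection concrete, here is a minimal sketch in Python. It is not an LLM and not OpenBOM’s implementation; it is a simple frequency estimate, assuming that previously released BOMs are available as sets of component names. The function names, the sample data, and the 0.8 threshold are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def component_frequencies(released_boms):
    """Return the fraction of released BOMs that contain each component."""
    total = len(released_boms)
    if total == 0:
        return {}
    counts = Counter()
    for bom in released_boms:
        counts.update(set(bom))  # count each component once per BOM
    return {part: n / total for part, n in counts.items()}


def likely_missing(draft_bom, released_boms, threshold=0.8):
    """List components that appear in at least `threshold` of released BOMs
    but are absent from the draft BOM (hypothetical helper)."""
    freqs = component_frequencies(released_boms)
    draft = set(draft_bom)
    return sorted(
        part for part, p in freqs.items() if p >= threshold and part not in draft
    )


# Illustrative data only: four released BOMs of a similar product family.
released = [
    {"frame", "motor", "controller", "battery", "charger"},
    {"frame", "motor", "controller", "battery"},
    {"frame", "motor", "controller", "battery", "display"},
    {"frame", "motor", "battery", "charger"},
]
draft = {"frame", "motor", "display"}

print(likely_missing(draft, released))
```

The quality of such a check depends entirely on the accuracy of the released BOMs it learns from: if historical records are incomplete, the estimated frequencies (and the recommendations) will be wrong too.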

PLM and Invisible AI

AI can appear in products in different ways. Until the introduction of ChatGPT, many people thought of AI as a separate system that is “used” and takes actions in place of people. However, ChatGPT and other generative AI implementations prompted people in the industry to think about other ways AI can appear in existing products.

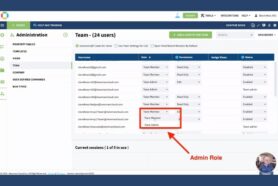

In my earlier article this week, “OpenBOM AI: It all starts from data,” I shared ideas and examples of how OpenBOM’s technological foundation can become a platform for organizing information and collecting accurate data to feed AI systems.

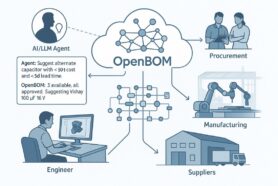

The core foundation of OpenBOM is a data management technology focused on gathering product information from multiple systems, combining it, getting everyone in the company to work on it collaboratively, and then feeding it into the best mechanisms for creating large language models (LLMs) to be used in decision-making processes in OpenBOM and other tools.

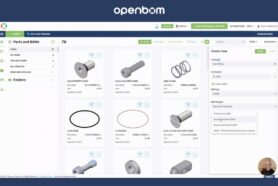

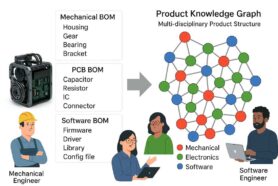

The foundation of OpenBOM’s platform technology is a multi-application and multi-tenant product knowledge graph, built using modern data management technologies and polyglot database architecture. The foundation of this graph is stored in a graph database.

The use of a graph database enables the creation of a robust data foundation for AI applications. OpenBOM’s ability to integrate and centralize data from various sources is an important element of the data platform. By connecting to all BOMs and related data, OpenBOM ensures that manufacturers have the necessary data to build and deploy AI solutions effectively.

We are experimenting with multiple generative AI scenarios where the ability to generate or validate content built from existing data can become a complementary use case for existing PLM systems. One of them is a BOM co-pilot that allows users to predict (generate) a bill of materials based on specific product characteristics, or to validate an already created bill of materials to find mistakes in a new design.

So, PLM AI is not some futuristic humanoid robot that works in place of people, as you may think. The future PLM AI is an invisible technology that makes working with existing PLM tools more efficient by providing recommendations or validation (e.g., cost or supplier selection options, finding missing parts, or fixing manufacturer part number mistakes) during the product development, review, or approval process.

Product Knowledge Graph (Multi-Application and Multi-Tenant)

Accurate data combined with product AI models is the recipe for making AI available. The essential step in this process is to develop a model that represents product information and to collect that information from existing PLM systems and other data sources. This brings us to graph models and knowledge graphs as a technology for contextualizing AI for product development, engineering, and PLM. Check some of my earlier articles:

Why Do You Need A Graph Model To Embrace PLM Complexity?

The importance of knowledge graph for future PLM platforms.

Below are three reasons why knowledge graphs and graph databases will become the underlying technology stack that fuels the future of PLM AI.

- The graph technology stack provides a foundation for PLM data management and analytics and supports heterogeneous product data collected from multiple applications and customers. This model has far fewer constraints than existing PLM SQL databases.

- Graph data science algorithms combined with specialized product data models make it possible to analyze relationships in connected data and provide a foundation for machine learning models and LLMs used for generative AI. This allows you to make better predictions with the data you already have.

- A multi-tenant data management platform and integrations with multiple applications make it possible to collect data from multiple sources and use the graph-based OpenBOM data model to develop specialized graph-aware applications using AI algorithms.
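The three points above can be illustrated with a small sketch of a multi-tenant product graph in plain Python. This is only an illustration of the general idea, not OpenBOM’s data model or API: the `ProductGraph` class, the `(tenant, name)` node convention, the relation names `uses` and `supplied_by`, and the sample data are all assumptions made for this example. It shows a “where used” traversal, a typical relationship query that follows graph edges directly instead of relying on recursive SQL joins.

```python
from collections import defaultdict, deque


class ProductGraph:
    """Tiny in-memory graph; nodes are hypothetical (tenant, name) tuples."""

    def __init__(self):
        # target node -> list of (relation, source node)
        self._incoming = defaultdict(list)

    def add_edge(self, source, relation, target):
        self._incoming[target].append((relation, source))

    def where_used(self, part, tenant=None):
        """Return every node that uses `part` directly or indirectly.

        If `tenant` is given, only parents that belong to that tenant
        are followed, which keeps one company's data out of another's results.
        """
        seen = set()
        queue = deque([part])
        while queue:
            node = queue.popleft()
            for relation, parent in self._incoming.get(node, ()):
                if relation != "uses" or parent in seen:
                    continue
                if tenant is not None and parent[0] != tenant:
                    continue
                seen.add(parent)
                queue.append(parent)
        return seen


# Illustrative data: two tenants sharing a catalog part.
graph = ProductGraph()
bolt = ("catalog", "bolt-M6")
graph.add_edge(("acme", "bike"), "uses", ("acme", "wheel"))
graph.add_edge(("acme", "wheel"), "uses", bolt)
graph.add_edge(("beta", "scooter"), "uses", bolt)
graph.add_edge(bolt, "supplied_by", ("vendor", "fastenco"))

print(graph.where_used(bolt))                 # all tenants
print(graph.where_used(bolt, tenant="acme"))  # a single tenant's view
```

In a real system this traversal would be a query against a graph database, but the shape of the question stays the same: follow relationships across applications and tenants, and apply tenant boundaries as part of the traversal.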

Conclusion:

Accurate data is the cornerstone of successful AI development. It ensures that AI models are trained on reliable information, leading to precise and trustworthy outcomes. As AI continues to evolve, AI technology vendors will provide generic tools and frameworks for developing LLMs and other AI models. The key element in contextualizing AI for PLM tools and use cases will be a model and tools for building an accurate data set through a collaborative, integrated process of data capture in existing manufacturing and product development environments. Accurate product data is the linchpin of AI success for manufacturing companies. It won’t happen overnight. Companies will have to develop tools and applications that integrate the process of capturing data and turning it into future PLM intelligence.

REGISTER FOR FREE and learn what tools OpenBOM provides today to optimize your working processes, collect accurate product data, and create your future AI tools.

Best, Oleg
