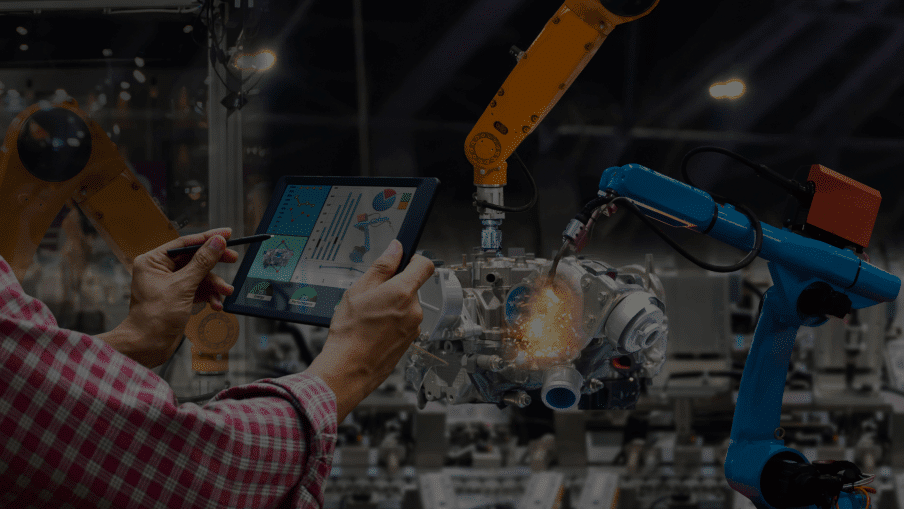

There’s a lot of excitement right now about Large Language Models (LLMs). From writing code to generating marketing copy, these models are quickly becoming part of the daily workflow for many teams. If you’re in the business of building physical products—hardware, electronics, machines—it’s natural to start wondering: Could AI help us build better, faster, and smarter too?

We think the answer is yes—but not in the way traditional PLM systems would have you believe.

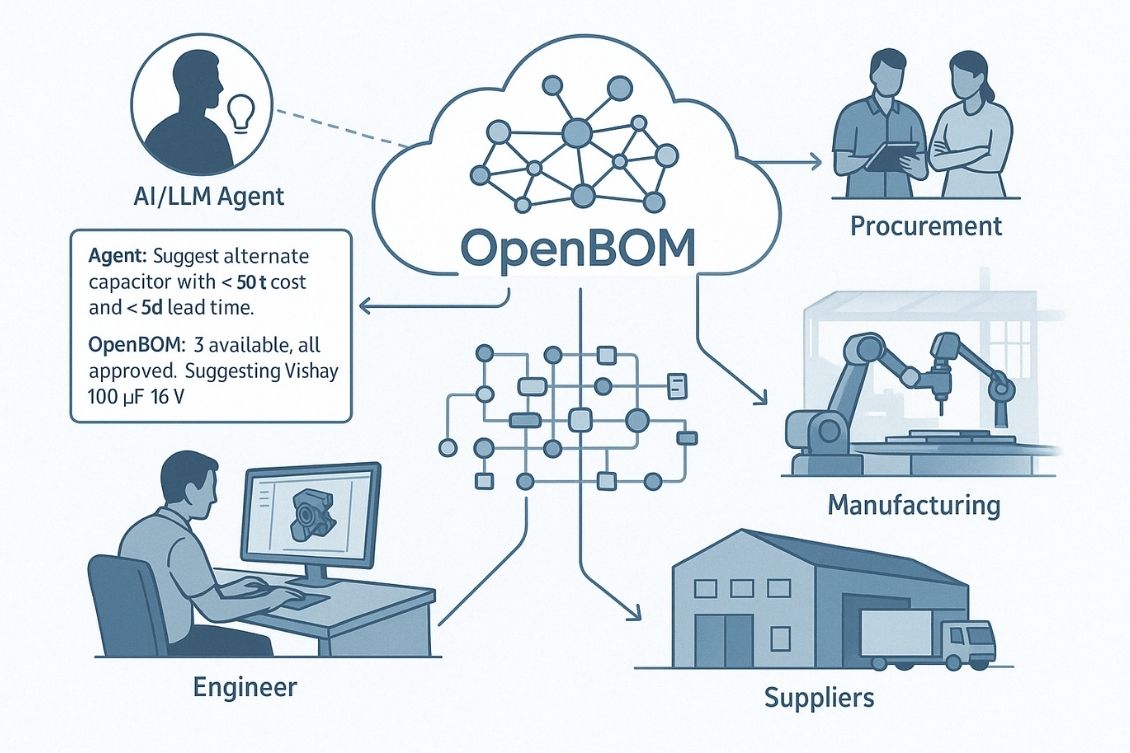

At OpenBOM, we’re exploring a different path: combining the structured intelligence of a Product Knowledge Graph with the generative and reasoning power of LLMs. It’s early days, but we believe this combination could fundamentally change how engineering and manufacturing teams work with product data.

LLMs Are Brilliant—but They Need a Ground to Stand On

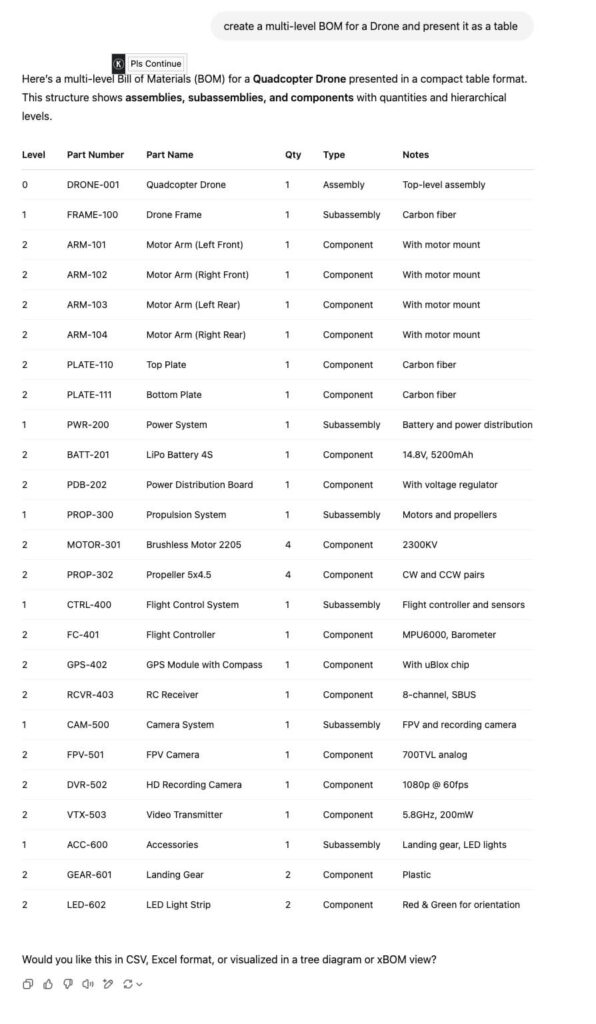

Ask a modern LLM to create a multi-level BOM for a drone, and it can give you a surprisingly thoughtful output. Ask it to explain the difference between alternates and substitutes, or even convert a tabular BOM into JSON or XML—it’s got you covered.
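Converting a tabular BOM into JSON is also something a few lines of deterministic code can do, which makes it a handy cross-check on what an LLM returns. The sketch below assumes a simple indented format where each row carries a level number (0 for the top assembly). The part numbers and the `rows_to_tree` helper are hypothetical and are not part of any OpenBOM API.

```python
import json
from dataclasses import asdict, dataclass, field

# Hypothetical indented BOM rows: (level, part_number, description, quantity).
# Level 0 is the top assembly; a row at level n belongs to the nearest
# preceding row at level n - 1.
ROWS = [
    (0, "DRN-100", "Quadcopter drone", 1),
    (1, "FRM-200", "Carbon frame", 1),
    (1, "PWR-300", "Battery assembly", 1),
    (2, "CEL-310", "Li-ion cell", 4),
    (2, "BMS-320", "Battery management board", 1),
    (1, "MOT-400", "Brushless motor", 4),
]

@dataclass
class BomNode:
    part_number: str
    description: str
    quantity: int
    children: list = field(default_factory=list)  # child BomNode objects

def rows_to_tree(rows):
    """Convert level-numbered BOM rows into a nested BomNode tree."""
    stack = []  # stack[i] holds the most recent node seen at level i
    root = None
    for level, part_number, description, quantity in rows:
        node = BomNode(part_number, description, quantity)
        if level == 0:
            if root is not None:
                raise ValueError("only one top-level assembly is supported")
            root = node
        elif level > len(stack):
            raise ValueError(f"{part_number}: level {level} has no parent at level {level - 1}")
        else:
            stack[level - 1].children.append(node)
        del stack[level:]  # close any deeper branches left over from earlier rows
        stack.append(node)
    return root

tree = rows_to_tree(ROWS)
print(json.dumps(asdict(tree), indent=2))
```

Each row attaches to the nearest preceding row one level up, so a single stack of open parents is enough. A row that jumps more than one level is rejected rather than guessed at.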

For the purpose of this discussion, I’ll set aside the questions of who owns the model and how to create a BOM from your specifications and the previous drone models your company has developed, and focus only on the technological capabilities and the interactions between data models.

What an LLM can’t do is remember that you already made changes to the battery assembly last week. It doesn’t know that component XYZ is approved for aerospace use, or that supplier ABC just raised their prices. It also has no concept of revision control, configuration management, or compliance workflows.

That’s because LLMs operate in a stateless, session-based world. They’re incredibly powerful, but they aren’t connected to the product lifecycle or to the specific operations and tools that engineering and manufacturing depend on.

And in hardware development, context is everything. Knowing which revision of a part you’re working with, understanding the relationships between assemblies, running impact analysis and where-used queries, and ensuring traceability across sourcing, production, and support isn’t optional. It’s the foundation.
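Where-used and impact analysis are, at heart, graph traversals: follow usage edges upward from a part until you reach finished products. Here is a minimal sketch, assuming the product structure is available as hypothetical (parent, child, quantity) edges held in memory. A real product graph would run the same kind of traversal against its own store.

```python
from collections import defaultdict, deque

# Hypothetical usage edges: (parent assembly, child part, quantity per parent).
USAGE = [
    ("DRN-100", "PWR-300", 1),
    ("DRN-200", "PWR-300", 2),
    ("PWR-300", "CEL-310", 4),
    ("CAM-500", "CEL-310", 1),
    ("DRN-200", "CAM-500", 1),
]

def build_where_used_index(edges):
    """Index child -> [(parent, qty), ...] so the graph can be walked upward."""
    index = defaultdict(list)
    for parent, child, qty in edges:
        index[child].append((parent, qty))
    return index

def where_used(part, index):
    """Return every assembly that uses `part` directly or indirectly,
    mapped to its distance above the part (1 = direct parent)."""
    found = {}
    queue = deque([(part, 0)])
    while queue:
        current, depth = queue.popleft()
        for parent, _qty in index.get(current, []):
            if parent not in found:  # breadth-first, so the first visit is the shortest path
                found[parent] = depth + 1
                queue.append((parent, depth + 1))
    return found

def affected_products(part, index):
    """Impact analysis: top-level products (never used as a child) that contain `part`."""
    return {p for p in where_used(part, index) if p not in index}

index = build_where_used_index(USAGE)
print(where_used("CEL-310", index))
print(affected_products("CEL-310", index))
```

With these sample edges, a change to cell CEL-310 reaches both drone models: directly through the battery assembly and the camera module, and one level further up through DRN-100 and DRN-200.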

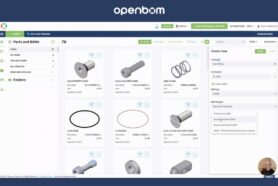

OpenBOM: A Product Graph With Memory, Structure, and Meaning

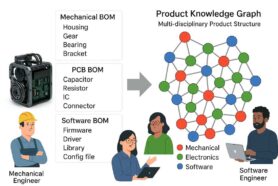

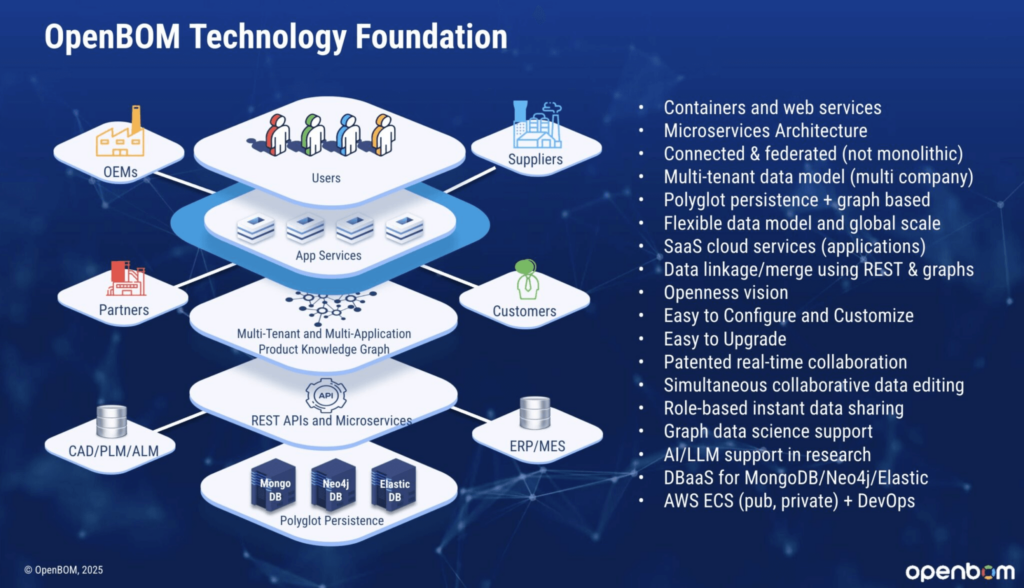

This is where OpenBOM’s Product Knowledge Graph and Collaborative Workspace capabilities fit in.

OpenBOM wasn’t designed as just another spreadsheet alternative. It’s built around a flexible, multi-tenant data management foundation that represents parts, assemblies, relationships, suppliers, revisions, and all the metadata that connects them. It’s a living model that understands the structure of a product and the lifecycle it goes through, with a user interface that lets engineers and everyone else in a manufacturing company work together.

Unlike LLMs, OpenBOM persists data over time. It tracks how components are used across multiple designs, maintains historical revisions, manages alternates, substitutes, vendors, and suppliers, and integrates with CAD, ERP, and sourcing tools. The system brings order to the creative chaos of product development.
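Persistence is what lets a system answer the question an LLM session can’t: what changed in the battery assembly last week? Below is a minimal sketch of an append-only revision log with hypothetical part numbers, revision letters, and dates. It illustrates keeping history instead of overwriting it, not how OpenBOM stores revisions internally.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass(frozen=True)
class Revision:
    """One immutable snapshot in an item's history."""
    item: str
    rev: str
    changed_at: datetime
    note: str

class RevisionLog:
    """Append-only history: new revisions are added, old ones are never overwritten."""

    def __init__(self):
        self._revisions = []

    def commit(self, item, rev, changed_at, note):
        revision = Revision(item, rev, changed_at, note)
        self._revisions.append(revision)
        return revision

    def history(self, item):
        """All revisions of `item`, oldest first."""
        return sorted((r for r in self._revisions if r.item == item), key=lambda r: r.changed_at)

    def latest(self, item):
        hist = self.history(item)
        return hist[-1] if hist else None

    def changed_since(self, item, since):
        return [r for r in self.history(item) if r.changed_at >= since]

log = RevisionLog()
log.commit("PWR-300", "A", datetime(2025, 3, 1), "Initial battery assembly")
log.commit("PWR-300", "B", datetime(2025, 5, 6), "Switched to higher-capacity cells")

today = datetime(2025, 5, 12)  # fixed date so the example is reproducible
for r in log.changed_since("PWR-300", today - timedelta(days=7)):
    print(r.rev, r.changed_at.date(), r.note)
```

Because `Revision` is frozen and the log only appends, earlier states stay queryable alongside the latest one.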

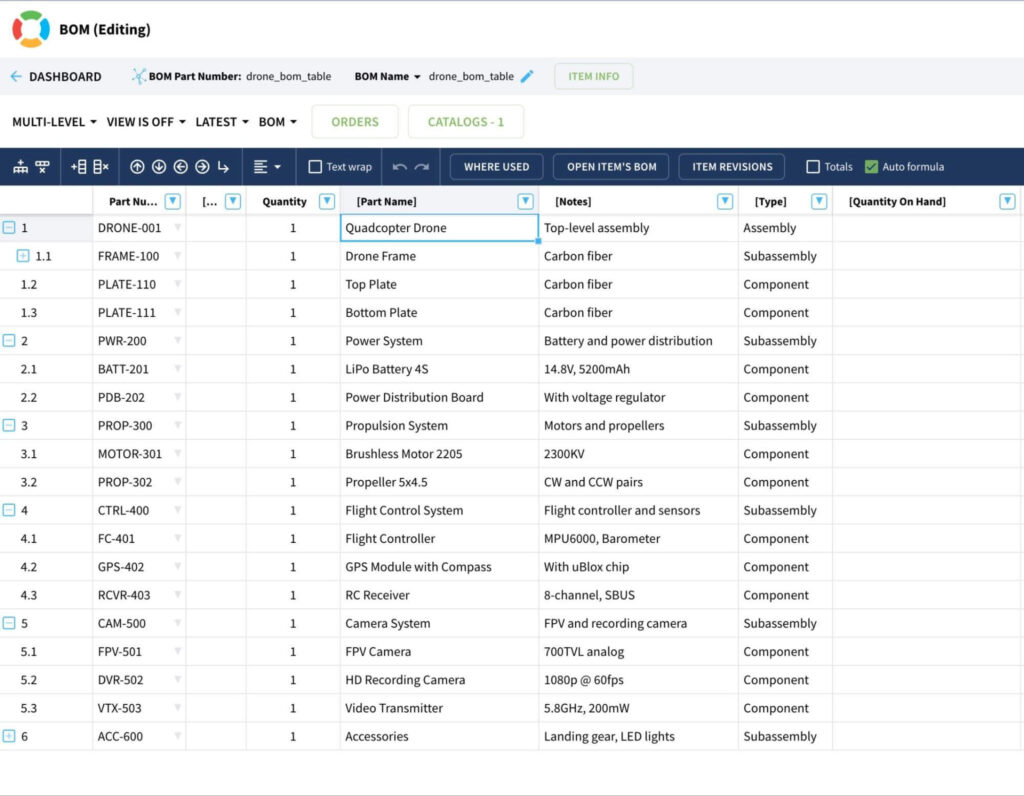

When you stream LLM output into the OpenBOM Product Knowledge Graph, things start to “click” together.

Combine that with an LLM’s ability to generate, reason, and communicate, and you start to see something new emerge: an intelligent assistant that not only suggests things but knows how to act on them in a meaningful, governed, and connected way. (I won’t be able to cover it all in a single post, so please stay tuned.)

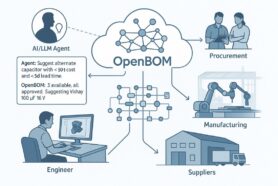

A Shared Collaborative Workspace for AI Agents and Humans

Let’s imagine what that looks like in practice.

You describe a new subsystem in natural language—“a lightweight payload pod with built-in cooling and Wi-Fi telemetry.” The LLM interprets the request and drafts a multi-level BOM structure with candidate components and estimated costs.
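Suppose the LLM returns its draft as nested JSON. Rolling estimated costs up the structure is simple recursion: an assembly costs its own unit cost (if any) plus each child’s rolled-up cost times the child’s quantity. The part names and USD figures below are made up for illustration, and the JSON shape (`name`, `qty`, `unit_cost`, `children`) is an assumption, not a format that OpenBOM or any LLM guarantees.

```python
import json

# Hypothetical LLM draft for the payload pod; names and estimated unit costs (USD) are illustrative.
DRAFT = json.loads("""
{
  "name": "Payload pod", "qty": 1,
  "children": [
    {"name": "Pod housing", "qty": 1, "unit_cost": 42.00},
    {"name": "Cooling module", "qty": 1, "children": [
      {"name": "40 mm fan", "qty": 2, "unit_cost": 3.50},
      {"name": "Heat sink", "qty": 1, "unit_cost": 6.25}
    ]},
    {"name": "Wi-Fi telemetry board", "qty": 1, "unit_cost": 18.90}
  ]
}
""")

def rolled_up_cost(node):
    """Estimated cost of ONE unit of `node`, including everything beneath it."""
    own = node.get("unit_cost", 0.0)
    return own + sum(child["qty"] * rolled_up_cost(child) for child in node.get("children", []))

def print_costs(node, depth=0):
    """Print an indented cost breakdown, one line per node."""
    print(f"{'  ' * depth}{node['qty']} x {node['name']}: ${rolled_up_cost(node):.2f} each")
    for child in node.get("children", []):
        print_costs(child, depth + 1)

print_costs(DRAFT)
```

For this draft the cooling module comes to $13.25 (two fans plus a heat sink) and the whole pod to $74.15.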

That data flows directly into the Product Knowledge Graph. Now it’s not just an idea—it’s structured. It’s versioned. It’s tied to suppliers and costs. It can be reviewed, revised, and routed to production. The LLM can come back and suggest design alternatives or notify you of sourcing risks, but the information lives in a real, connected system that knows your product.
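The step from idea to structured, versioned record is where governance comes in: an LLM draft should be checked before it becomes part of the record, and every accepted draft should be kept as its own version. A minimal sketch, assuming a hypothetical JSON shape for drafted BOM nodes (`name`, `qty`, optional `unit_cost` and `children`). `validate` and `VersionedStore` are illustrative names, not OpenBOM APIs.

```python
import copy

REQUIRED_FIELDS = {"name", "qty"}

def validate(node, path="root"):
    """Collect problems in a drafted BOM node (and its children) instead of trusting it blindly."""
    errors = []
    missing = REQUIRED_FIELDS - set(node)
    if missing:
        errors.append(f"{path}: missing {sorted(missing)}")
    qty = node.get("qty")
    if not isinstance(qty, int) or isinstance(qty, bool) or qty <= 0:
        errors.append(f"{path}: qty must be a positive integer, got {qty!r}")
    cost = node.get("unit_cost", 0)
    if isinstance(cost, bool) or not isinstance(cost, (int, float)) or cost < 0:
        errors.append(f"{path}: unit_cost must be a non-negative number, got {cost!r}")
    for i, child in enumerate(node.get("children", [])):
        errors.extend(validate(child, f"{path}/{child.get('name', i)}"))
    return errors

class VersionedStore:
    """Keeps every accepted draft as an immutable, numbered version."""

    def __init__(self):
        self._versions = []

    def commit(self, bom, author):
        problems = validate(bom)
        if problems:
            raise ValueError("; ".join(problems))
        # Deep-copy so later edits to the caller's dict can't rewrite history.
        self._versions.append({"version": len(self._versions) + 1, "author": author,
                               "bom": copy.deepcopy(bom)})
        return len(self._versions)

    def get(self, version):
        if not 1 <= version <= len(self._versions):
            raise KeyError(version)
        return copy.deepcopy(self._versions[version - 1]["bom"])

store = VersionedStore()
draft = {"name": "Payload pod", "qty": 1,
         "children": [{"name": "40 mm fan", "qty": 2, "unit_cost": 3.5}]}
print("committed version", store.commit(draft, author="llm-draft-agent"))

bad = {"name": "Payload pod", "qty": 1, "children": [{"name": "40 mm fan", "qty": -2}]}
print(validate(bad))
```

Collecting every problem (rather than stopping at the first) gives the LLM or a human reviewer a complete list to fix before resubmitting.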

It’s not magic—it’s just combining the best of both worlds. A system with structure and memory, and an assistant with reasoning and language.

Multi-Tenant Foundation: One System, Many Agents

Here’s a technical detail that makes this vision even more powerful: OpenBOM’s multi-tenant cloud architecture.

Because OpenBOM was built from the ground up on a multi-tenant data foundation, it’s perfectly positioned to support multiple AI agents working across different contexts, each with a very low footprint and all connected together. Want to spin up a BOM workspace for a new agent? No problem. Want to sandbox an experimental product concept with an AI co-pilot before promoting it to the main environment? You can do that too. The OpenBOM platform serves as a multi-tenant agent foundation for organizing product information.

Each workspace can serve a specific product, project, customer, or even LLM agent persona—with access permissions, data scopes, and collaboration models that scale up or down depending on the need.

This approach makes it incredibly easy to prototype intelligent workflows. You can:

- Create a digital assistant for a specific product line.

- Spin up a sourcing co-pilot trained on your preferred vendors.

- Let different agents support different departments—engineering, purchasing, QA.
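The workspace-per-agent idea can be sketched as a tenant that owns several workspaces, each with its own readers, writers, and items, plus a promote step that copies a sandbox into the main environment only when the caller may write there. This is a hypothetical in-memory toy: the `Tenant` and `Workspace` classes and the principal names are invented for illustration, and a real multi-tenant platform enforces isolation in its storage and API layers, not in a Python dict.

```python
from dataclasses import dataclass, field

@dataclass
class Workspace:
    name: str
    scope: str                                  # e.g. a product, project, customer, or agent persona
    readers: set = field(default_factory=set)
    writers: set = field(default_factory=set)
    items: dict = field(default_factory=dict)   # part number -> attributes

class Tenant:
    """One company's data, partitioned into access-controlled workspaces."""

    def __init__(self, name):
        self.name = name
        self.workspaces = {}

    def create_workspace(self, name, scope, readers=(), writers=()):
        # Anyone who can write can also read.
        ws = Workspace(name, scope, set(readers) | set(writers), set(writers))
        self.workspaces[name] = ws
        return ws

    def write(self, principal, ws_name, part, attrs):
        ws = self.workspaces[ws_name]
        if principal not in ws.writers:
            raise PermissionError(f"{principal} cannot write to {ws_name}")
        ws.items[part] = dict(attrs)

    def read(self, principal, ws_name, part):
        ws = self.workspaces[ws_name]
        if principal not in ws.readers:
            raise PermissionError(f"{principal} cannot read {ws_name}")
        return dict(ws.items[part])

    def promote(self, principal, src, dst):
        """Copy every item from a sandbox workspace into a target workspace."""
        source, target = self.workspaces[src], self.workspaces[dst]
        if principal not in source.readers or principal not in target.writers:
            raise PermissionError(f"{principal} cannot promote {src} to {dst}")
        for part, attrs in source.items.items():
            target.items[part] = dict(attrs)

acme = Tenant("acme-drones")
acme.create_workspace("main", "drone product line", readers={"sourcing-copilot"}, writers={"eng-lead"})
acme.create_workspace("pod-sandbox", "design co-pilot persona", writers={"design-copilot", "eng-lead"})

acme.write("design-copilot", "pod-sandbox", "POD-001", {"description": "Payload pod", "status": "concept"})
try:
    acme.write("design-copilot", "main", "POD-001", {})
except PermissionError as e:
    print("blocked:", e)

acme.promote("eng-lead", "pod-sandbox", "main")
print(acme.read("sourcing-copilot", "main", "POD-001"))
```

The design co-pilot can experiment freely in its sandbox, but only a principal with write access to the main workspace can promote the result, and a read-only sourcing agent sees it only after that happens.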

It’s not just about automation. It’s about enabling intelligent agents to work with real data in a real product lifecycle system.

We’re Experimenting and You’re Invited

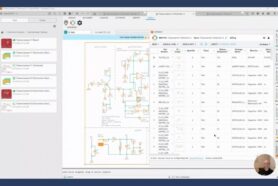

We’re not just theorizing. At OpenBOM, we’ve already started experimenting with early scenarios that combine LLMs and our product knowledge graph.

Consider one scenario: ask GPT-4 to generate a BOM structure for a sample design, then feed that structure into the OpenBOM database, validate it, link real part numbers and costs, and use it to simulate a production run. We added AI-generated supplier recommendations, lead-time flags, and even a draft email requesting quotes. All of it was driven by the LLM but grounded in real product data.
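Simulating a production run boils down to exploding the multi-level BOM for a build quantity, comparing the result with stock, and flagging parts whose supplier lead time is too long to cover a shortage. Here is a minimal sketch with hypothetical part numbers, stock levels, and lead times; in practice those values would come from real product data rather than literals.

```python
from collections import Counter

# Hypothetical multi-level BOM: assembly -> [(child, quantity per assembly), ...].
BOM = {
    "DRN-100": [("FRM-200", 1), ("PWR-300", 1), ("MOT-400", 4)],
    "PWR-300": [("CEL-310", 4), ("BMS-320", 1)],
}
# Hypothetical on-hand stock and supplier lead times (days) for purchased parts.
ON_HAND = {"FRM-200": 50, "CEL-310": 120, "BMS-320": 40, "MOT-400": 150}
LEAD_TIME_DAYS = {"FRM-200": 10, "CEL-310": 35, "BMS-320": 21, "MOT-400": 14}

def explode(part, qty, bom, totals=None):
    """Accumulate the purchased (leaf) quantities needed to build `qty` of `part`."""
    if totals is None:
        totals = Counter()
    children = bom.get(part)
    if not children:            # no children: treat as a purchased part
        totals[part] += qty
    else:
        for child, per_parent in children:
            explode(child, qty * per_parent, bom, totals)
    return totals

def simulate_run(product, build_qty, bom, on_hand, lead_times, max_lead_days):
    """Return (part, required, flags) rows for a production run."""
    report = []
    for part, required in sorted(explode(product, build_qty, bom).items()):
        shortage = max(0, required - on_hand.get(part, 0))
        flags = []
        if shortage:
            flags.append(f"short {shortage}")
            # A lead-time flag only matters when the part has to be bought.
            if lead_times.get(part, 0) > max_lead_days:
                flags.append(f"lead time {lead_times[part]}d > {max_lead_days}d")
        report.append((part, required, flags))
    return report

for part, required, flags in simulate_run("DRN-100", 40, BOM, ON_HAND, LEAD_TIME_DAYS, max_lead_days=21):
    print(f"{part}: need {required}", ", ".join(flags) or "OK")
```

Building 40 drones with this sample data needs 160 cells against 120 in stock, and their 35-day lead time exceeds the 21-day window, so CEL-310 gets both flags; the motors are short but can arrive in time.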

In my earlier blog post, How to build a “Zapier for Engineering”, I explored the idea of using workflow tools to coordinate multiple engineering tasks and integrate tools with one another. Technologies like MCP (Model Context Protocol), introduced just a few months ago, show promise for how tools will be integrated.
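MCP builds on JSON-RPC 2.0: a client discovers a server’s tools with `tools/list` and invokes one with `tools/call`, passing the tool name and its arguments. The sketch below handles just those two requests for a single hypothetical `where_used` tool backed by an in-memory table. It skips the rest of the protocol (the `initialize` handshake, capability negotiation, and the stdio or HTTP transport), so treat it as an illustration of the message shapes. A real server would use an official MCP SDK, and nothing here is an OpenBOM API.

```python
import json

# Hypothetical in-memory stand-in for a product graph's where-used data.
WHERE_USED = {"CEL-310": ["PWR-300", "CAM-500"], "PWR-300": ["DRN-100"]}

TOOLS = {
    "where_used": {
        "description": "List the assemblies that directly use a part number.",
        "inputSchema": {
            "type": "object",
            "properties": {"part_number": {"type": "string"}},
            "required": ["part_number"],
        },
        "handler": lambda args: WHERE_USED.get(args["part_number"], []),
    }
}

def handle(raw_request):
    """Answer an MCP-style JSON-RPC 2.0 `tools/list` or `tools/call` request."""
    req = json.loads(raw_request)
    rid, method, params = req.get("id"), req.get("method"), req.get("params", {})

    def result(payload):
        return json.dumps({"jsonrpc": "2.0", "id": rid, "result": payload})

    def error(code, message):
        return json.dumps({"jsonrpc": "2.0", "id": rid, "error": {"code": code, "message": message}})

    if method == "tools/list":
        tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for n, t in TOOLS.items()]
        return result({"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(-32602, f"Unknown tool: {params.get('name')}")
        try:
            output = tool["handler"](params.get("arguments", {}))
        except KeyError as missing:
            # Tool-level failures are reported inside the result, flagged with isError.
            return result({"content": [{"type": "text", "text": f"missing argument {missing}"}],
                           "isError": True})
        return result({"content": [{"type": "text", "text": json.dumps(output)}], "isError": False})
    return error(-32601, f"Method not found: {method}")

call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "where_used", "arguments": {"part_number": "CEL-310"}}}
print(handle(json.dumps(call)))
```

The input schema is what lets an LLM client know how to call the tool; the product data itself stays on the server side.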

This is just scratching the surface.

We’re now looking for forward-thinking manufacturing teams who want to experiment together—co-develop use cases, run pilot workflows, and explore what’s possible when structured product data meets intelligent reasoning.

If you’re curious, let’s talk. We’re building this future with openness and collaboration at the core. We’ll run more experiments and make the first versions of the tool available on the OpenBOM platform very soon.

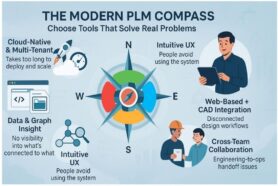

Conclusion: The Next Generation of PLM

The old world of PLM was about control, rigidity, and trying to make creative teams conform to legacy workflows. Most of those PLM tools are either on-premises installations or single-tenant hosted systems with a heavy footprint and a high cost to run.

The new world is about flexibility, intelligence, and systems that adapt to how people actually work. Combining agentic AI protocols with the ability to instantiate and run OpenBOM agents and to integrate with engineering tools will unlock automation for many tasks that teams today perform again and again by importing, exporting, copying, and emailing files.

With LLMs, we now have tools that can understand and respond to natural language. With OpenBOM, we have a product graph that knows the structure and history of your design. Put them together, and you get something entirely new: a platform that can reason, adapt, and collaborate with you—not just store your files.

This isn’t about replacing PLM. It’s about rethinking it—from the ground up—with the tools we finally have available.

We’re excited about the direction. We’re experimenting actively. And we’d love to explore it with others who are thinking the same way.

The future of PLM is more open, more connected, and more human.

Interested in discussing more? Contact us or register for free to see how OpenBOM can help you today.

Best, Oleg
