Last week, I wrote about what differentiates a typical legacy PLM system from a modern data platform capable of providing intelligent insight into product lifecycle data and the relationships between its elements. Check my blog to read more. In a nutshell, a typical legacy PLM system focuses on moving data from one place to another as efficiently as possible. The engineering scenarios at the top of the PLM use case list are engineering change management and the release process, pre-release data management, and product data management. It is all about efficiency. Before moving forward, though, I want to talk about a topic that recently caught my attention: effectiveness vs efficiency.

Effectiveness vs Efficiency – What Comes First?

These two terms are often conflated and confused by many teams. As Peter Drucker put it in his book, effectiveness is about doing the right things, while efficiency is about doing things right. Both require measuring results and outcomes against what needs to be done. An efficient process focuses on streamlining operations, collaboration, data sharing, and communication. It is about keeping the process from getting stuck and making data available when it is needed. Sounds very much like a traditional PLM system to me. It is all about priorities, progress, results, and targets (e.g., ECO turnaround time). In other words, an efficient team focuses on getting maximum output with the least amount of effort and time.

Things look different when effectiveness comes first. It requires using resources and data to understand what needs to be done to get the best results. Effective processes or systems help you find the place, activity, or solution that leads to the best decision. In other words, they focus first on doing the right things and only afterward on doing them faster (more efficiently).

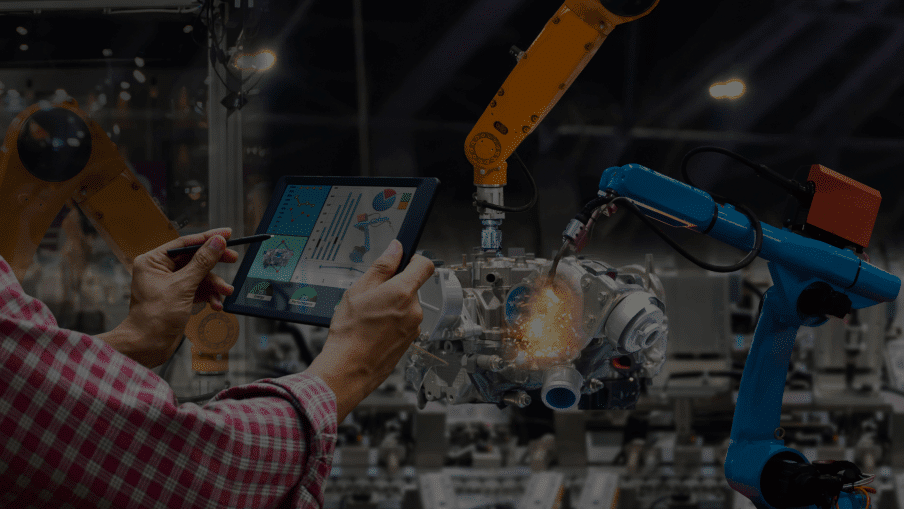

Extending from process streamlining to a decision support system

For years, PLM systems were implemented to make things work efficiently. Whether releasing files, storing product data, simplifying revision control, or supporting the change management process, PLM vendors focused on speed and productivity while organizing the most efficient process. Cloud PLM (or SaaS) didn’t make much of a difference. Most vendors understood the cloud simply as a way to deliver the same PLM system faster, cheaper, and with less effort. Even upgrading a PLM system to the next revision, which for years was the biggest PLM challenge, is really a matter of efficiency.

What is heavily overlooked are tools that can help us make the right decision or, in other words, decide what to do first. Such tools do not focus on how to complete a revision sign-off or ECO approval faster, but on deciding whether a change is needed at all and what the right change is. A decision to replace a poor supplier with a better one, the selection of a specific vendor or component, or an instant cost calculation based on a design decision can turn things upside down.

The paradigm change for PLM systems can be a switch of focus from data management and process streamlining to artificial intelligence, data models, machine learning, and specific database queries applied in a decision support system, so that engineering and company activities focus on what is right to do.

3 Things OpenBOM Does Differently To Build A Decision Support System

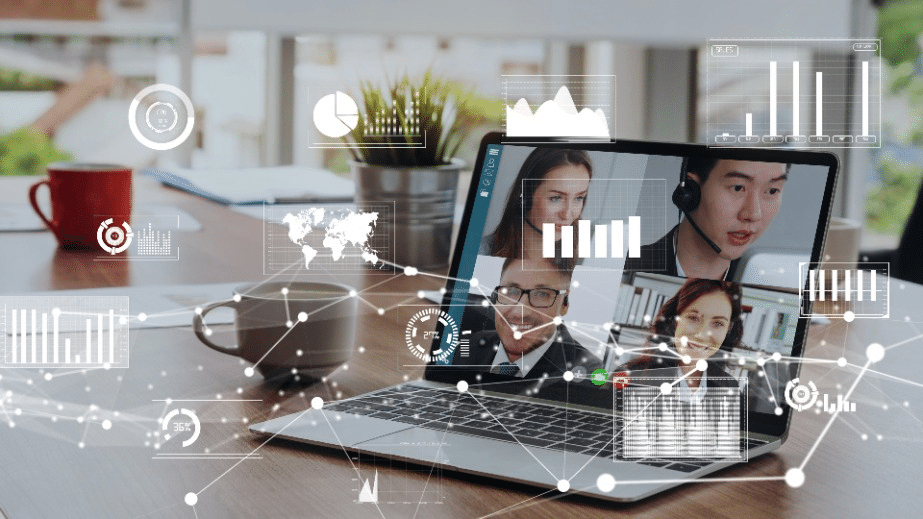

At OpenBOM, we focus on the data first and on the ability to bring data together from multiple sources, companies, and products to provide insight and analytics. Combined, these can produce results and help companies focus on doing the right things. Here are 3 things OpenBOM does differently from other PLM systems that focus on process improvements only.

- Flexible multi-tenant data management platform

- Seamless data integration from multiple data sources

- Data science and analytics

Flexibility is crucial for managing complex product data. Many legacy PDM/PLM systems, as well as their younger “SaaS siblings”, compromise on data flexibility by imposing an OOTB (out-of-the-box) process. OpenBOM starts with a multi-tenant data management system that is capable of adapting to change.

Integration with many systems and online sources is the second differentiator. While many PLM systems focus on building a single source of truth by controlling all aspects of the data management process and data mapping, OpenBOM makes integrating data from multiple systems a priority.

The OpenBOM data management platform uses graphs and networks as a more advanced data management paradigm. Its foundation is built on top of a graph database (Neo4j), which makes it possible to apply platform capabilities such as Graph Data Science and other methods for decision support. Many OpenBOM features focus on data analysis (e.g., Cost Rollup). This allows OpenBOM to rethink the entire PLM implementation business and help customers bring in the important data that makes every decision more intelligent.
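To make the idea of a cost rollup over a product structure more concrete, here is a minimal sketch in Python. It is not OpenBOM's implementation and does not use the Neo4j API; the item names, costs, and the `rolled_up_cost` function are all hypothetical, and the BOM is assumed to be an acyclic graph stored as a plain dictionary.

```python
# Hypothetical sample data, for illustration only.
# Unit cost of each purchased part.
UNIT_COST = {"frame": 40.0, "wheel": 12.5, "bolt": 0.25}

# Product structure as a graph: parent -> list of (child, quantity) edges.
BOM = {
    "bike": [("frame", 1), ("wheel_assembly", 2)],
    "wheel_assembly": [("wheel", 1), ("bolt", 4)],
}


def rolled_up_cost(item, bom, unit_cost):
    """Return the item's own cost plus the cost of all of its children.

    Assumes the BOM has no cycles; items missing from unit_cost
    (such as assemblies) contribute no cost of their own.
    """
    own = unit_cost.get(item, 0.0)
    children = bom.get(item, [])
    return own + sum(qty * rolled_up_cost(child, bom, unit_cost)
                     for child, qty in children)


# Current design.
baseline = rolled_up_cost("bike", BOM, UNIT_COST)

# "What if" scenario: a different (hypothetical) wheel supplier at 10.0 per unit.
alternative = rolled_up_cost("bike", BOM, {**UNIT_COST, "wheel": 10.0})

print(baseline, alternative)
```

Comparing `baseline` with `alternative` is the kind of question a decision support system answers: not how quickly a change can be approved, but whether the change is worth making in the first place.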

Conclusion:

Data management and analytics capabilities like OpenBOM's are part of a growing trend: PLM systems can move from traditional process improvement, focused on productivity and efficiency, to becoming a data management center that supports decision-makers with the right information, insight, calculations, and data points. While this trend is still emerging, I expect manufacturing companies to start asking “what are the right things we need to do?” and to look for systems like OpenBOM to help them find the answer.

REGISTER FOR FREE to use OpenBOM and learn how it can help you today.

Best, Oleg
